Knowledge graphs, with their ability to represent interconnected information, remain a powerful tool for understanding complex data. Although much of the AI hype has moved elsewhere, the potential of knowledge graphs, especially in combination with modern LLMs, is still largely untapped. This blog post revisits an experiment I conducted in early 2023, in which I used GPT-3.5 to explore automated knowledge graph generation in its early stages.

Background: The Allure of RDF and Semantic Networks

Before diving into the details of my experiment, it's essential to understand the foundational concepts that inspired it. My exploration into automated knowledge graph creation was heavily influenced by the principles of the Resource Description Framework (RDF) and the related concept of semantic networks. These approaches offer powerful ways to structure and encode meaning in a way that's both human-readable and machine-interpretable.

RDF uses a simple yet elegant structure called a "triple" to represent relationships between entities. Each triple consists of a Subject, a Predicate, and an Object. The predicate describes the relationship between the subject and the object.

Breaking complex information into these elementary units makes it easier to process and reason with. For example, "A whale is a mammal" can be represented as the triple :Whale :is_a :Mammal, where :Whale is the subject, :is_a the predicate, and :Mammal the object. Because every statement takes the same three-part shape, knowledge can be expressed concisely and in a standardized way.
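To make the triple structure concrete, here is a minimal Python sketch, assuming plain strings for entity and predicate names. The `Triple` type and `to_turtle` helper are illustrative names, not part of any RDF library. The output mimics the Turtle shorthand used above; a complete Turtle file would also need an `@prefix` declaration for the empty `:` prefix, which is omitted here.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One RDF-style statement: subject --predicate--> object."""

    subject: str
    predicate: str
    obj: str  # the RDF "object" (named obj to avoid confusion with Python's builtin)


def to_turtle(triple: Triple) -> str:
    """Render a triple in Turtle-style shorthand using the empty ':' prefix.

    A complete Turtle file would also need an @prefix declaration for ':'.
    """
    return f":{triple.subject} :{triple.predicate} :{triple.obj} ."


whale_fact = Triple("Whale", "is_a", "Mammal")
print(to_turtle(whale_fact))  # :Whale :is_a :Mammal .
```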

Semantic networks provide a visual representation of these relationships. They depict knowledge as a graph in which nodes represent concepts and directed edges (arrows) represent the relationships between them. The direction of an arrow matters: it shows which concept is the subject and which is the object of the relationship.

The image above illustrates a simple semantic network. You can see how the arrows specify the direction of each relationship (e.g., "Cat has Fur," but not "Fur has Cat"). This visual representation clarifies how semantic networks, and by extension RDF triples, capture the nuances of meaning between connected entities.
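Directionality falls out naturally if the network is stored as outgoing edges per subject. The sketch below is one possible representation, assuming a plain dictionary of sets; `build_network` and `has_relation` are hypothetical helpers, and the sample triples are illustrative rather than copied from the image.

```python
from collections import defaultdict


def build_network(triples):
    """Map each subject to the set of (predicate, object) edges leaving it."""
    edges = defaultdict(set)
    for subject, predicate, obj in triples:
        edges[subject].add((predicate, obj))
    return edges


def has_relation(network, subject, predicate, obj):
    """True only if an edge with this predicate points from subject to obj."""
    return (predicate, obj) in network.get(subject, set())


network = build_network([
    ("Cat", "has", "Fur"),
    ("Cat", "is_a", "Mammal"),
    ("Mammal", "has", "Fur"),
])

print(has_relation(network, "Cat", "has", "Fur"))  # True
print(has_relation(network, "Fur", "has", "Cat"))  # False
```

Because edges are keyed by their subject, "Fur has Cat" is simply absent unless someone adds it explicitly.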

It was this elegant combination of structured representation (RDF triples) and visual clarity (semantic networks) that sparked the idea for my experiment. I wanted to see if GPT-3.5 could generate these triples, effectively constructing a rudimentary knowledge graph from a simple text prompt.

The Experiment: GPT-3.5 and the Automated Generation of Knowledge Graphs

The core objective of my experiment was to determine whether GPT-3.5, a leading LLM in early 2023, could automatically generate knowledge graphs from user-provided text prompts. I envisioned a tool that would allow users to enter any topic of interest and receive a visual representation of the key concepts and their relationships within that domain. This "topic discovery tool", as I called it, held the promise of making learning and exploration more efficient and engaging.

The technical implementation involved using the OpenAI GPT-3.5 API. I designed the application to accept a user-defined topic as a text parameter. This input would then be used to craft a prompt for GPT-3.5, asking it to identify related concepts and their relationships. The API would return a text response, which I then processed to extract the key entities and relationships. Crucially, these extracted relationships were encoded as JSON objects representing RDF triples — subject, predicate, and object — allowing me to capture the directionality of the connections.
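The prompt-then-parse step might look roughly like the following sketch. The prompt wording and the exact JSON shape are assumptions (the original prompt is not reproduced in this post), and the API call itself is left out so the example runs offline; `build_prompt` and `parse_triples` are hypothetical names.

```python
import json

# Hypothetical prompt template; not the exact wording used in the experiment.
PROMPT_TEMPLATE = (
    "List the key concepts related to the topic '{topic}' and how they relate. "
    "Respond only with a JSON array of objects, each with the keys "
    '"subject", "predicate", and "object".'
)


def build_prompt(topic: str) -> str:
    """Insert the user's topic into the prompt template."""
    return PROMPT_TEMPLATE.format(topic=topic)


def parse_triples(response_text: str) -> list[tuple[str, str, str]]:
    """Extract (subject, predicate, object) tuples from the model's JSON reply.

    Items missing any of the three keys are skipped. Raises json.JSONDecodeError
    if the bracketed span is not valid JSON.
    """
    # Tolerate prose around the JSON by slicing from the first '[' to the last ']'.
    start, end = response_text.find("["), response_text.rfind("]")
    if start == -1 or end < start:
        return []
    items = json.loads(response_text[start : end + 1])
    triples = []
    for item in items:
        if isinstance(item, dict) and all(k in item for k in ("subject", "predicate", "object")):
            triples.append((item["subject"], item["predicate"], item["object"]))
    return triples


# A hand-written sample reply, not actual GPT-3.5 output.
sample_reply = 'Here you go: [{"subject": "Balanced Scorecard", "predicate": "tracks", "object": "KPIs"}]'
print(parse_triples(sample_reply))  # [('Balanced Scorecard', 'tracks', 'KPIs')]
```

Slicing to the outermost brackets is a pragmatic guard, since chat-style models sometimes wrap JSON in explanatory text.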

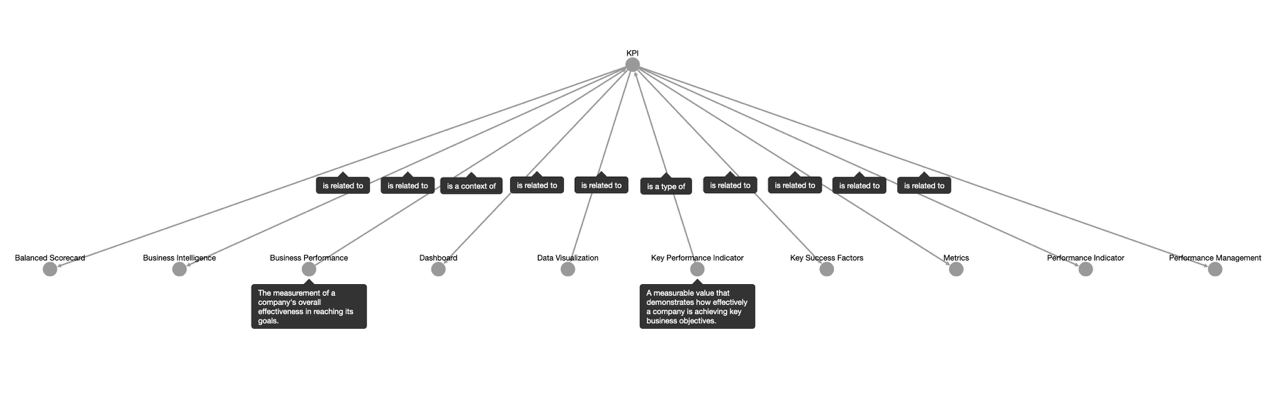

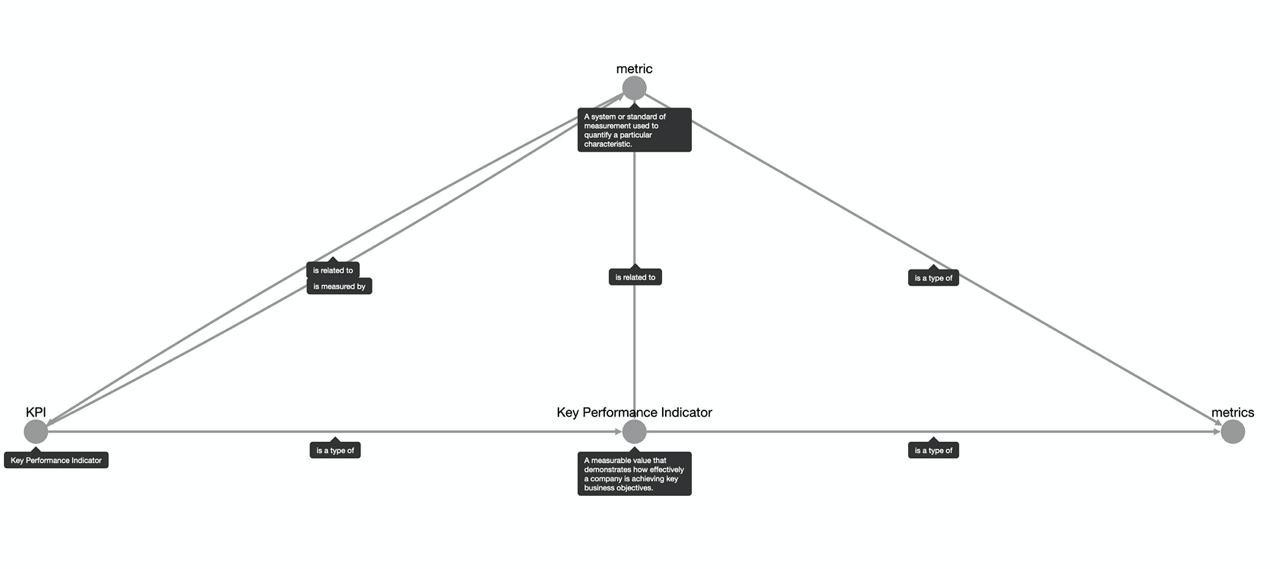

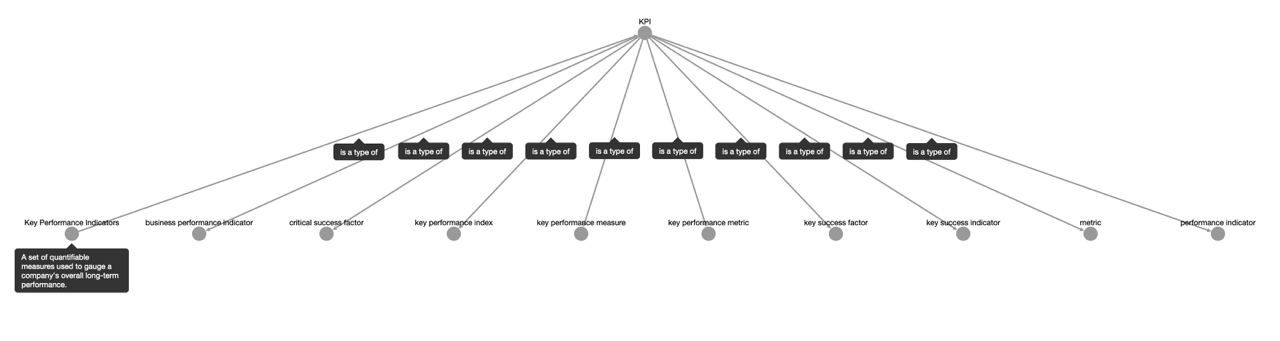

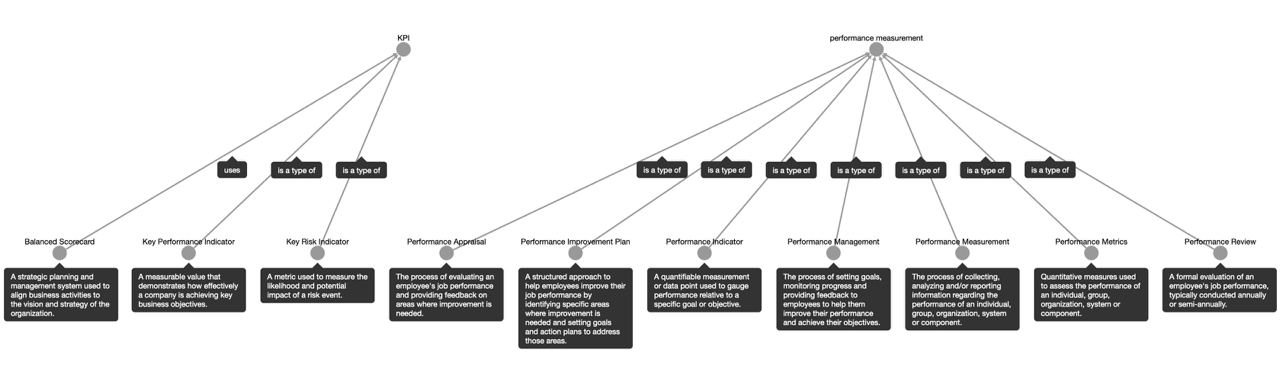

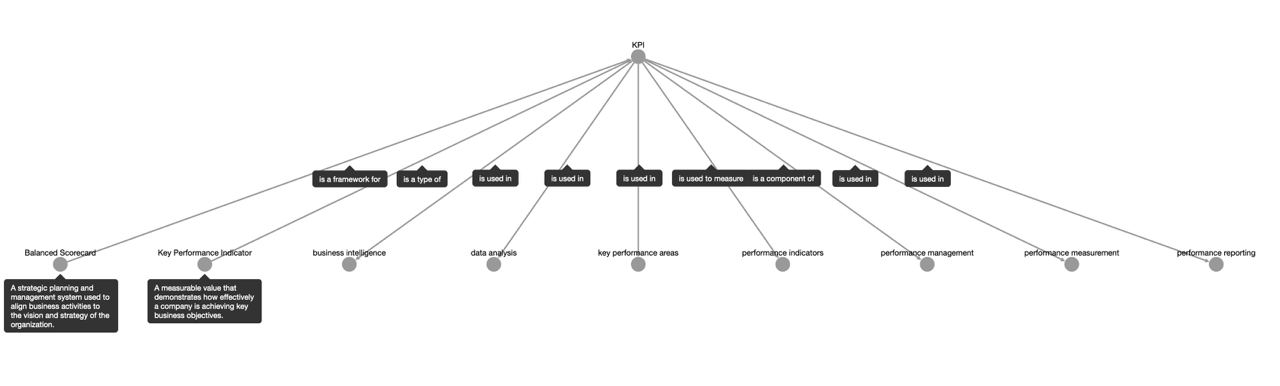

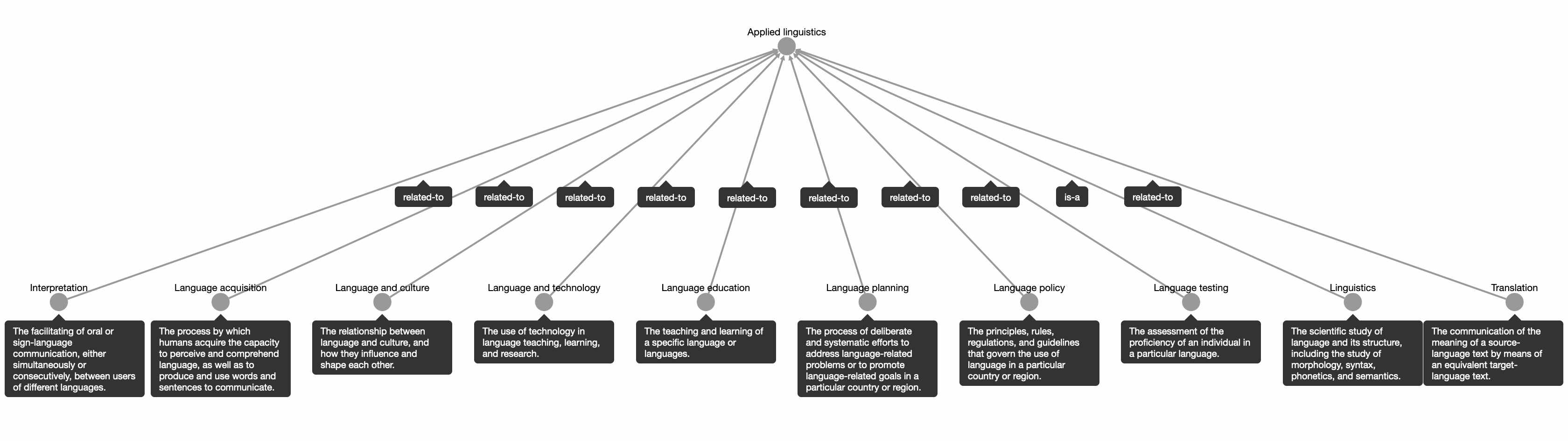

To test the application's capabilities, I initially used two distinct topics: "Key Performance Indicators (KPIs)" and "Applied Linguistics." These domains, with their relatively well-defined concepts and relationships, provided a good starting point for evaluating GPT-3.5's performance.

The images above show some of the knowledge graphs generated for "KPIs." You can see how the model identified related concepts like "Balanced Scorecard," "Business Intelligence," and various types of performance indicators. The connections between these concepts, represented by labeled edges, illustrate the relationships extracted by GPT-3.5.

Similarly, one of the images above shows a knowledge graph generated for "Applied Linguistics," demonstrating that the tool could handle a completely different domain. It identified related areas such as "Interpretation," "Language Acquisition," and "Linguistics," and connected them with relevant relationships.
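The post does not say which visualization library rendered these graphs. As one possible approach, and purely as an assumption, triples can be exported to Graphviz's DOT language, where each triple becomes a directed edge carrying its predicate as a label. The `to_dot` helper and the sample predicate `draws_on` are hypothetical.

```python
def to_dot(triples, graph_name="topic"):
    """Render triples as a Graphviz DOT digraph with labeled, directed edges."""

    def quote(text):
        # DOT string literals need embedded double quotes escaped.
        return '"' + text.replace('"', '\\"') + '"'

    lines = [f"digraph {graph_name} {{"]
    for subject, predicate, obj in triples:
        lines.append(f"  {quote(subject)} -> {quote(obj)} [label={quote(predicate)}];")
    lines.append("}")
    return "\n".join(lines)


print(to_dot([("Applied Linguistics", "draws_on", "Linguistics")]))
```

Saved to a file, the output can be rendered with `dot -Tpng graph.dot -o graph.png`.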

GPT-3.5 proved remarkably good at turning user-defined topics into triples that rendered as clean, readable knowledge graphs. The graphs generated for the test cases "Key Performance Indicators" and "Applied Linguistics" were surprisingly coherent. The model identified relevant concepts and connected them with relationships that, on the surface, seemed to capture the essence of each domain. Importantly, GPT-3.5 generated well-formed triples, with the order of subject and object encoding the direction of each relationship, in line with the principles of RDF and semantic networks. This initial success hinted at the exciting potential of using LLMs for automated knowledge representation and exploration.
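"Well-formed" can be checked mechanically before a graph is drawn. The sketch below is a hypothetical structural filter, not something described in the original experiment. It drops items that are not three non-empty strings and removes exact duplicates. It says nothing about whether a relationship is factually true.

```python
def clean_triples(raw_triples):
    """Keep well-formed triples, dropping malformed items, empty parts, and duplicates.

    Whitespace is trimmed; order of first appearance is preserved.
    """
    seen = set()
    cleaned = []
    for triple in raw_triples:
        # Skip anything that is not a 3-item sequence of strings.
        if not isinstance(triple, (tuple, list)) or len(triple) != 3:
            continue
        if not all(isinstance(part, str) for part in triple):
            continue
        subject, predicate, obj = (part.strip() for part in triple)
        if not (subject and predicate and obj):
            continue  # one of the three parts is empty
        key = (subject, predicate, obj)
        if key not in seen:
            seen.add(key)
            cleaned.append(key)
    return cleaned


raw = [
    ("KPI", "measures", "Performance"),
    ("KPI", "measures", "Performance"),  # duplicate
    ("", "is_a", "Metric"),              # empty subject
    ("Dashboard", "displays"),           # missing object
]
print(clean_triples(raw))  # [('KPI', 'measures', 'Performance')]
```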

Limitations: Context Window, Complexity, and UI Challenges

While the initial results were encouraging, my experiment with GPT-3.5 also revealed some important limitations. These constraints stemmed primarily from the technical characteristics of the model at the time and the specific design choices I made for the application.

The 2K Token Context Window: A key constraint was the limited context window of GPT-3.5 in early 2023. The model could handle only 2,049 tokens per request, and that budget was shared between the prompt and the generated response. This directly limited how complex and comprehensive the generated knowledge graphs could be. Longer, more nuanced prompts, designed to elicit a wider range of concepts and relationships, often exceeded the limit or left little room for the model's answer. As a result, the generated graphs sometimes offered only a limited snapshot of a topic, missing potentially important connections and deeper levels of detail.
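The trade-off is easy to see with some back-of-the-envelope arithmetic. This sketch estimates tokens with the common rule of thumb of about four characters per English token. That is a heuristic, and a real tokenizer such as OpenAI's `tiktoken` gives exact counts. The figure of 20 tokens per JSON triple is an assumed average, not a measured one.

```python
import math

CONTEXT_LIMIT = 2049  # per-request limit cited above, shared by prompt and reply


def estimate_tokens(text: str) -> int:
    """Rough estimate at ~4 characters per token (heuristic, not a tokenizer)."""
    return math.ceil(len(text) / 4)


def completion_budget(prompt: str, limit: int = CONTEXT_LIMIT) -> int:
    """Tokens left for the model's answer once the prompt is counted."""
    return max(0, limit - estimate_tokens(prompt))


def max_triples(prompt: str, tokens_per_triple: int = 20, limit: int = CONTEXT_LIMIT) -> int:
    """How many triples fit in the remaining budget (assumed cost per triple)."""
    return completion_budget(prompt, limit) // tokens_per_triple


long_prompt = "x" * 6000  # stands in for a ~1,500-token prompt
print(completion_budget(long_prompt))  # 549
print(max_triples(long_prompt))        # 27
```

Under these assumptions, a prompt of about 1,500 tokens leaves room for only a couple of dozen triples, which is consistent with the small graphs described here.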

Simplicity by Design: Given the context window limitation and the focus on rapid topic exploration, I prioritized generating smaller, more manageable graphs. This design choice favored speed and ease of understanding over capturing the full complexity of a domain. The resulting graphs, while useful for getting a quick overview of a topic, were intentionally simplified, and therefore didn't represent the full depth of knowledge available.

UI Challenges for Deeper Exploration: Another limitation was the user interface. My initial implementation provided basic visualization of the generated graphs, but it lacked the functionality for dynamic exploration. I had envisioned a user experience where people could expand individual nodes to reveal subtopics and further levels of detail, creating a more interactive and in-depth exploration process. However, implementing this kind of dynamic graph interaction proved challenging within the scope of my initial experiment. The complexity of real-time graph manipulation and visualization required a more sophisticated UI design and development approach, which I deferred for future iterations.
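Behind the UI, the envisioned "expand a node" interaction could rest on a simple data structure that fetches triples lazily, one concept at a time. The sketch below is hypothetical. `ExpandableGraph` and the `fetch_related` hook are illustrative names, and canned responses stand in for the LLM so the example runs offline.

```python
from typing import Callable

Triple = tuple[str, str, str]


class ExpandableGraph:
    """A knowledge graph that grows one node at a time as the user expands it."""

    def __init__(self, fetch_related: Callable[[str], list[Triple]]):
        # fetch_related is a hypothetical hook: in a real tool it might prompt the
        # LLM for triples about a single concept and parse the reply.
        self.fetch_related = fetch_related
        self.triples: list[Triple] = []
        self._seen: set[Triple] = set()
        self._expanded: set[str] = set()

    def expand(self, node: str) -> list[Triple]:
        """Fetch triples for `node` once and return only those not already in the graph."""
        if node in self._expanded:
            return []  # each node is fetched at most once
        self._expanded.add(node)
        added = []
        for triple in self.fetch_related(node):
            if triple not in self._seen:
                self._seen.add(triple)
                added.append(triple)
        self.triples.extend(added)
        return added


# Canned responses stand in for the model so the example runs offline.
canned = {
    "KPIs": [("KPIs", "feed_into", "Balanced Scorecard")],
    "Balanced Scorecard": [("Balanced Scorecard", "has_perspective", "Financial")],
}
graph = ExpandableGraph(lambda node: canned.get(node, []))
graph.expand("KPIs")
graph.expand("Balanced Scorecard")
print(len(graph.triples))    # 2
print(graph.expand("KPIs"))  # []
```

Fetching per node keeps each request small, which also sidesteps the context-window limit: depth comes from repeated expansion rather than one large prompt.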

Practical Applications: Potential Use Cases

Despite the limitations discussed in the previous section, my GPT-3.5 experiment revealed several potential use cases for this type of technology, even in its early 2023 form.

- Brainstorming and Idea Generation: The tool could be valuable for brainstorming sessions. Generating a quick knowledge graph on a topic could spark new ideas and connections, even if the graph isn't completely comprehensive or perfectly accurate. The visual representation of related concepts could serve as a catalyst for creative thinking and exploration.

- Preliminary Knowledge Mapping: When examining a new subject area, the tool could provide a preliminary map of the key concepts and their relationships. This initial overview, while simplified, could help learners get a basic understanding of the domain's structure and identify areas for further investigation. It's essential, however, to treat this initial map as a starting point, not a definitive representation.

- Educational Demonstrations: The tool could be used in educational settings to illustrate the principles of knowledge graphs and semantic networks. It offers a practical way to visualize how concepts are interconnected and how meaning is encoded through relationships. However, educators should emphasize that the generated graphs are simplified examples and may not be entirely accurate or complete.

- Content Overview: For users seeking a quick, high-level overview of a topic, the tool could offer a convenient way to visualize related concepts. However, it's paramount to emphasize that this overview should be treated as a starting point for further research, not a replacement for in-depth study or consultation with reliable sources.

Important Considerations:

In all these potential use cases, it's essential to treat the generated knowledge graphs with caution. They should be considered preliminary, potentially incomplete, and subject to verification. Human oversight, critical thinking, and cross-referencing with trusted sources are crucial for responsible use. The tool, in its early 2023 form, was best viewed as a supplementary aid for exploration and idea generation, not a replacement for rigorous research or expert knowledge.

Reflections and Future Directions: The Evolving Landscape of Knowledge Graphs and LLMs

Looking back at this experiment from the vantage point of December 2024, it's striking how much the field of AI has progressed. In early 2023, GPT-3.5 was considered state-of-the-art, yet it faced limitations in context window and reasoning capabilities that constrained the complexity and accuracy of the knowledge graphs it could generate.

Today, more advanced LLMs such as GPT-4, Claude, and Gemini offer significant improvements in these areas. While I haven't replicated the experiment with these newer models, their enhanced capabilities suggest exciting possibilities for the future of automated knowledge graph creation. We can imagine future tools that generate far more comprehensive and nuanced graphs, overcoming the limitations encountered in my initial exploration.

Several promising research directions are worth exploring:

- Enhanced Accuracy and Reasoning: Newer LLMs offer improved factual accuracy and more sophisticated reasoning abilities. This could translate to more reliable relationships in the generated graphs, requiring less human intervention for correction and validation.

- Larger Context Windows: The increased context windows of newer models would allow for more complex and detailed prompts, enabling the generation of richer and more comprehensive knowledge graphs.

- Automated Fact Verification: Integrating automated fact-checking mechanisms could significantly enhance the reliability of AI-generated knowledge graphs. Cross-referencing with trusted sources could help identify and correct inaccuracies, making the graphs more trustworthy for various applications.

- Advanced Graph Visualization and Interaction: Developing more sophisticated user interfaces for interacting with complex knowledge graphs remains a crucial area for future work. Dynamic exploration, filtering, and visualization tools would enhance the user experience and unlock the full potential of these rich representations of knowledge.
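In its simplest form, the cross-referencing idea from the fact-verification item amounts to comparing generated triples against a trusted set. The sketch below assumes such a trusted set already exists and uses exact matching after light normalization. Real verification would also need entity linking and synonym handling, which is out of scope here. `normalize` and `cross_reference` are hypothetical helpers.

```python
def normalize(triple):
    """Lower-case and trim each part so trivial formatting differences still match."""
    return tuple(part.strip().lower() for part in triple)


def cross_reference(generated, trusted):
    """Split generated triples into (confirmed, unverified) against a trusted set.

    'Unverified' means only that the trusted set lacks the triple, not that it is false.
    """
    trusted_keys = {normalize(t) for t in trusted}
    confirmed, unverified = [], []
    for triple in generated:
        if normalize(triple) in trusted_keys:
            confirmed.append(triple)
        else:
            unverified.append(triple)
    return confirmed, unverified


trusted = [("whale", "is_a", "mammal")]
generated = [("Whale", "is_a", "Mammal"), ("Whale", "is_a", "Fish")]
confirmed, unverified = cross_reference(generated, trusted)
print(confirmed)   # [('Whale', 'is_a', 'Mammal')]
print(unverified)  # [('Whale', 'is_a', 'Fish')]
```

Routing unverified triples to a human reviewer, rather than deleting them, keeps the human oversight stressed earlier in this post.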

The journey of exploring automated knowledge graph creation is far from over. While my experiment with GPT-3.5 offered a glimpse into the potential and the challenges, the rapid evolution of AI promises even more exciting discoveries in the years to come.